Why Your Organization Needs a Telemetry Pipeline

Every day, businesses generate an enormous amount of telemetry data—logs, metrics, and traces—that must be efficiently processed and analyzed. Without a structured way to handle this data, organizations risk drowning in noise, missing critical insights, and facing performance bottlenecks.

Despite its importance, many organizations lack the capability to properly manage their telemetry pipelines, leading to:

- Alert fatigue and delays due to excessive data

- High costs from data storage and processing

- Poor metrics and KPIs because of inefficient monitoring

- Operational inefficiencies that impact system performance

A well-designed telemetry pipeline ensures that data flows efficiently, is filtered to reach the right tools, and is properly indexed and analyzed. In this post, we’ll break down why your organization needs to build and manage a telemetry pipeline, and how it can transform your telemetry management strategy.

What is a Telemetry Pipeline?

A telemetry pipeline is a structured system that collects, processes, and routes logs, metrics and traces from various sources to designated destination consumers. It provides telemetry fidelity, ensuring a comprehensive and reliable representation of the system or process being monitored. Data can be filtered, enriched, transformed, and forwarded to observability, monitoring, and data storage solutions.

Think of a telemetry pipeline as the central nervous system for your IT environment. Data comes in from all over (infrastructure, systems, applications, workloads), but if you don’t have an efficient way to route, process, and analyze it, you end up with sensory overload. With a properly managed telemetry pipeline, Tavve aggregates that data, filters out the noise, and makes sure only the critical and necessary data reaches your monitoring and observability tools.

Key Functions of a Telemetry Pipeline

- Data Collection: Gathers logs, metrics, and traces from multiple sources (infrastructure, systems, applications, workloads, etc.).

- Filtering & Processing: Cleans unnecessary data, removes redundancies, and structures information.

- Routing & Distribution: Sends the right data to the right destinations, such as monitoring tools (Prometheus), log analytics stacks (ELK), or SIEM platforms (Splunk).

- Storage & Archival: Ensures compliance by storing critical logs while discarding irrelevant noise.
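To make these four functions concrete, here is a minimal Python sketch of a pipeline that collects events, filters them, and routes them to destinations. It is an illustration only, not how PacketRanger or any specific product works: the `Event` and `Pipeline` classes, the destination names, and the filtering and routing rules are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    source: str    # hypothetical source name, e.g. "firewall-1"
    kind: str      # "log", "metric", or "trace"
    severity: str  # "debug", "info", "warning", or "error"
    message: str


@dataclass
class Pipeline:
    # Destination name -> delivered events (lists stand in for real tools).
    destinations: dict = field(
        default_factory=lambda: {"siem": [], "monitoring": [], "archive": []}
    )
    seen: set = field(default_factory=set)

    def keep(self, event: Event) -> bool:
        """Filtering: drop debug noise and exact duplicates."""
        if event.severity == "debug":
            return False
        if event in self.seen:
            return False
        self.seen.add(event)
        return True

    def route(self, event: Event) -> list:
        """Routing: pick destinations from the event's kind and severity."""
        targets = ["archive"]  # everything that survives filtering is archived
        if event.kind == "metric":
            targets.append("monitoring")
        if event.kind == "log" and event.severity in ("warning", "error"):
            targets.append("siem")
        return targets

    def process(self, events) -> None:
        """Collection entry point: filter each event, then distribute it."""
        for event in events:
            if self.keep(event):
                for name in self.route(event):
                    self.destinations[name].append(event)


# Made-up sample data: one debug line, one duplicated error, one metric.
events = [
    Event("firewall-1", "log", "debug", "packet trace"),
    Event("firewall-1", "log", "error", "link down"),
    Event("firewall-1", "log", "error", "link down"),
    Event("app-1", "metric", "info", "cpu=0.71"),
]
pipeline = Pipeline()
pipeline.process(events)
counts = {name: len(items) for name, items in pipeline.destinations.items()}
print(counts)
```

In this sketch the debug line and the repeated error are dropped before anything is forwarded, so each destination only receives what it needs. A production pipeline would typically deduplicate within a time window rather than forever, and would forward over the network instead of appending to lists.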

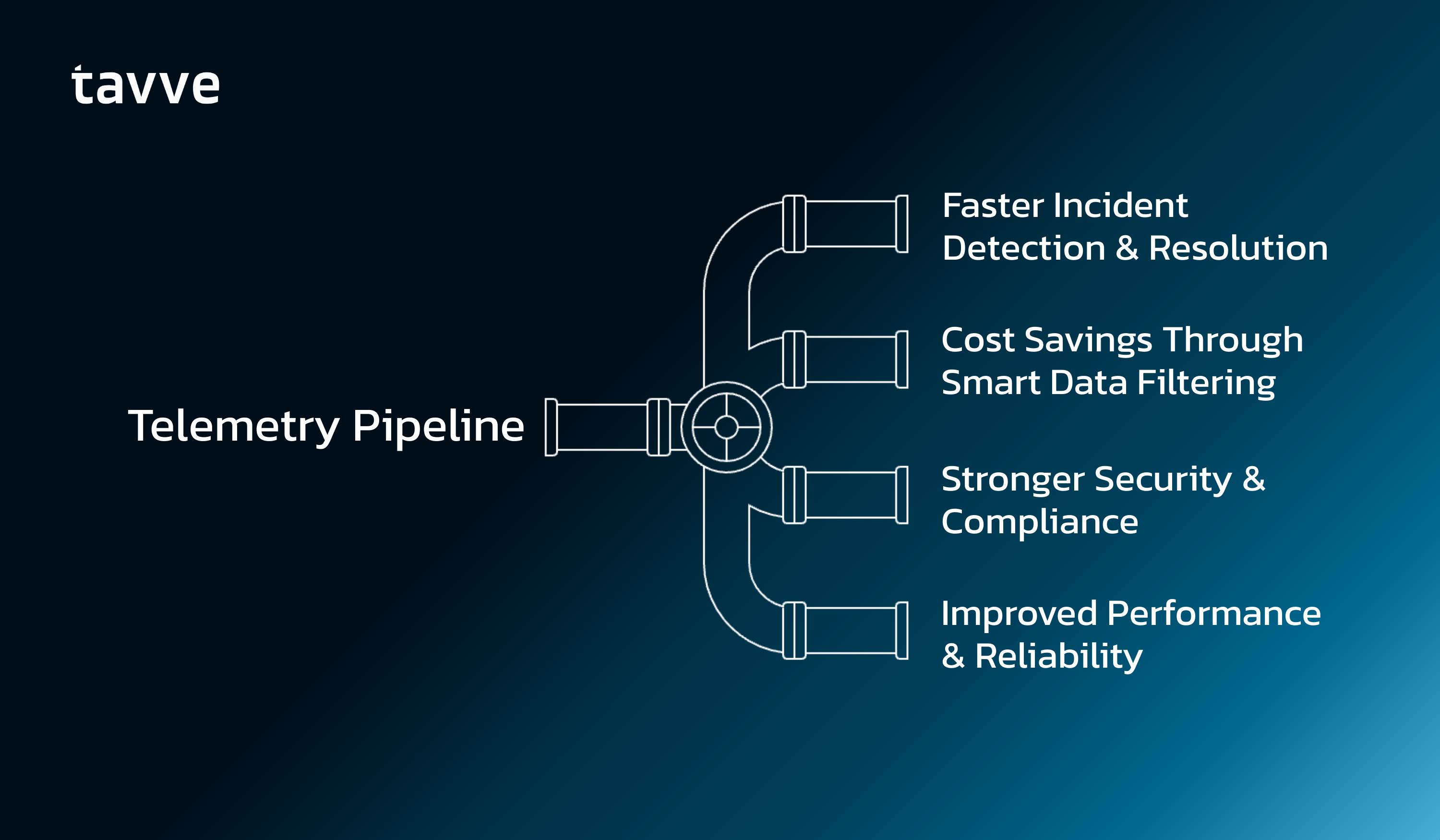

The Business Impact: Why Organizations Need a Telemetry Pipeline

1. Faster Incident Detection & Resolution

Without a telemetry pipeline, IT teams struggle with massive and disparate log data, often missing key anomalies until they escalate. A telemetry pipeline can reduce data noise and improve data fidelity, which minimizes alert fatigue and delay. This ensures that security breaches, system failures, and performance issues are identified and managed in near real-time.

Example: A global e-commerce company detected a DDoS attack 40 percent faster after implementing a telemetry pipeline that filtered and flagged unusual network utilization spikes.

2. Cost Savings Through Smart Data Filtering

Many organizations waste millions storing unnecessary telemetry data. Companies using solutions like Splunk often pay on a consumption basis, so filtering out redundant or low-priority telemetry data can result in substantial cost savings.

A customer of Tavve was sending terabytes of telemetry data daily to Splunk and to many other on-premises and cloud-based tools. Much of the data was low-value, informational data that wasn’t being alerted on or used for correlation. Splunk, and many of their other monitoring tools, had inadvertently become a system of record for the organization.

PacketRanger’s smart filtering and flexible data forwarding helped the organization to reduce the size of their telemetry pipelines, saving the company millions in application license fees, compute resources, and WAN/LAN utilization.
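The arithmetic behind consumption-based savings is simple enough to sketch. The Python below is a back-of-the-envelope estimate, assuming a flat price per gigabyte ingested. The function names and every number in the example are hypothetical, not figures from the customer above or from any vendor's price list.

```python
def forwarded_gb(daily_gb: float, low_value_fraction: float, drop_rate: float) -> float:
    """Daily volume still forwarded after dropping part of the low-value data.

    low_value_fraction: share of the stream that is low value (0 to 1)
    drop_rate: share of that low-value data the pipeline filters out (0 to 1)
    """
    if not (0 <= low_value_fraction <= 1 and 0 <= drop_rate <= 1):
        raise ValueError("fractions must be between 0 and 1")
    return daily_gb * (1 - low_value_fraction * drop_rate)


def annual_savings(daily_gb: float, low_value_fraction: float,
                   drop_rate: float, cost_per_gb: float) -> float:
    """Yearly ingest cost avoided, assuming a flat per-GB price (an assumption)."""
    removed = daily_gb - forwarded_gb(daily_gb, low_value_fraction, drop_rate)
    return removed * cost_per_gb * 365


# Hypothetical inputs: 1,000 GB/day, half of it low value, half of that dropped,
# at an assumed 2.00 per GB ingested.
remaining = forwarded_gb(1000, 0.5, 0.5)
savings = annual_savings(1000, 0.5, 0.5, 2.0)
print(remaining, savings)
```

Real licensing is rarely a flat per-GB rate, so treat the result as a rough sizing exercise. The point is that savings scale with both how much of the stream is low value and how aggressively it is filtered.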

3. Stronger Security & Compliance

Security teams rely on telemetry data to detect threats, track user behavior, and maintain compliance. A telemetry pipeline ensures that only the most relevant security data is prioritized, preventing system overload and improving response times.

Organizations tend to collect everything, but when everything is important, nothing is important. A properly managed telemetry pipeline lets you focus on the signals that matter—real security risks—not just noise.

Example: A bank using a telemetry pipeline reduced false positive security alerts by 60 percent, allowing analysts to focus on actual threats.

4. Improved Performance & Reliability

Telemetry pipelines allow organizations to spot network and application issues before they cause downtime. By visualizing and building a baseline for data flow, companies can identify peak usage trends, network congestion, and failing components before they affect end-users.

A customer of Tavve using PacketRanger’s pipeline visualizations saw a substantial and increasing data spike as the day progressed into their peak usage time. It turned out their core firewalls had been left running in debug mode after a maintenance window and were flooding their monitoring and security systems with unnecessary log data. Without a system to manage their telemetry pipelines, they would have struggled to identify the offending devices.

Example: A cloud service provider used telemetry visualization to predict and prevent a major system failure, reducing downtime by 80 percent.
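The baseline idea described above can be sketched in a few lines of Python. This is one simple approach, assuming per-source event rates are already being collected: a source is flagged when its current rate exceeds its historical mean by a chosen number of standard deviations. The function name, the threshold of 3, and the sample data are hypothetical, and this is not a description of how PacketRanger detects spikes.

```python
from statistics import mean, stdev


def find_spiking_sources(baseline, current, threshold=3.0):
    """Return (source, rate, limit) for sources well above their baseline.

    baseline: dict of source -> list of past events-per-minute samples
    current:  dict of source -> latest events-per-minute reading
    """
    spiking = []
    for source, rate in current.items():
        history = baseline.get(source, [])
        if len(history) < 2:
            continue  # not enough history to compute a deviation
        limit = mean(history) + threshold * stdev(history)
        if rate > limit:
            spiking.append((source, rate, limit))
    # Largest offenders first.
    return sorted(spiking, key=lambda item: item[1], reverse=True)


# Made-up data: a core firewall left in debug mode versus a normal app server.
baseline = {
    "fw-core-1": [100, 110, 90, 105],
    "app-1": [50, 55, 45, 52],
}
current = {"fw-core-1": 5000, "app-1": 53}

offenders = find_spiking_sources(baseline, current)
for source, rate, limit in offenders:
    print(f"{source}: {rate} events/min (limit {limit:.0f})")
```

With this sample data only the firewall is flagged, which is the kind of signal that points an operator at the offending devices. A fixed threshold is a blunt instrument, so real deployments would usually account for time-of-day patterns as well.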

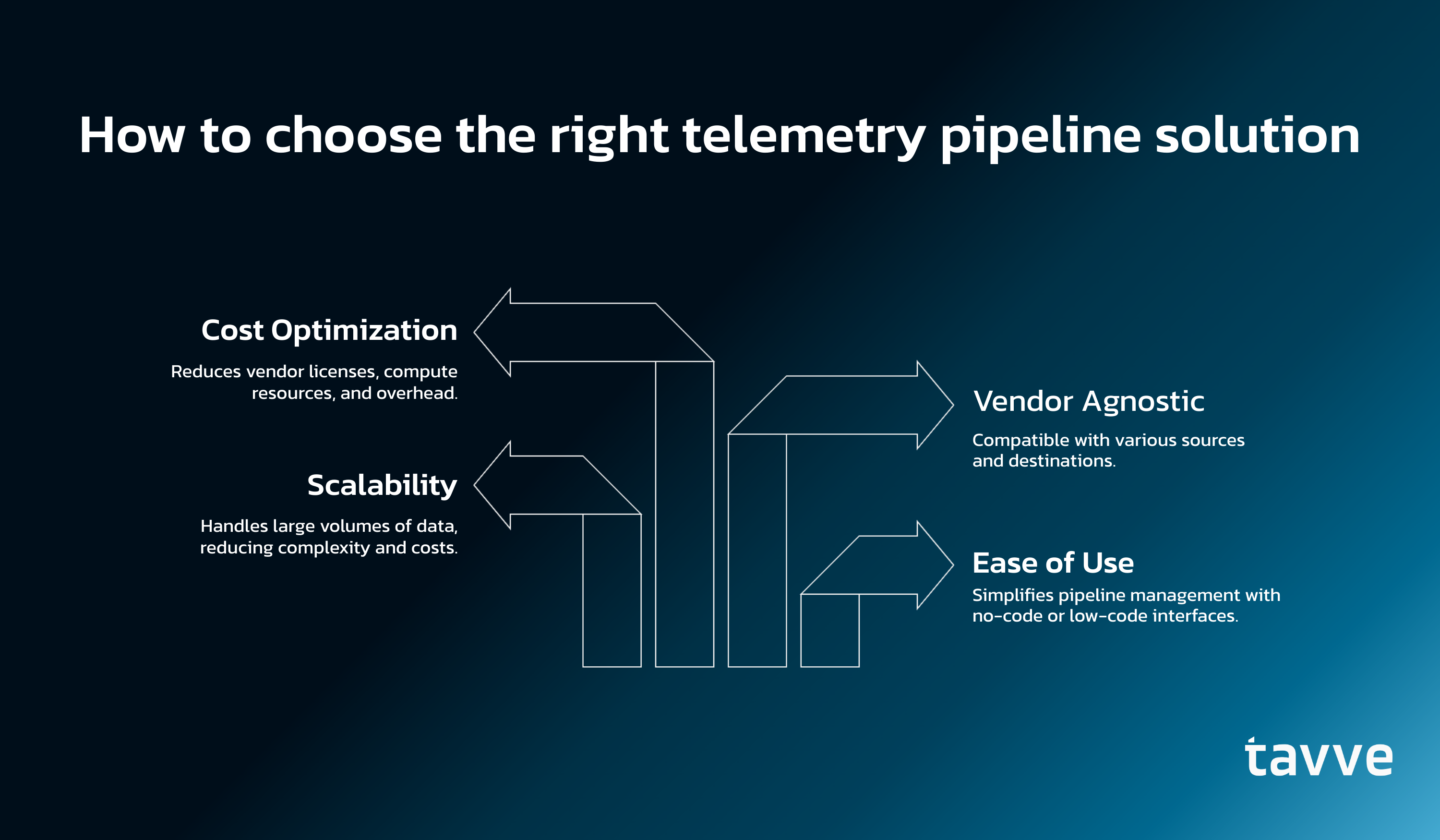

Choosing the Right Telemetry Pipeline Solution

When selecting a telemetry pipeline, organizations should look for solutions that offer:

- Ease of Use: A no-code or low-code interface option to simplify pipeline management and routing.

- Scalability: Ability for each node to handle large volumes of telemetry data to reduce complexity and cost of administration.

- Vendor Agnostic: Not limited to a specific vendor’s sources or destination applications.

- Cost Optimization: Capability to optimize data pipelines in ways that reduce the costs of vendor licenses, compute resources, and administrative overhead.

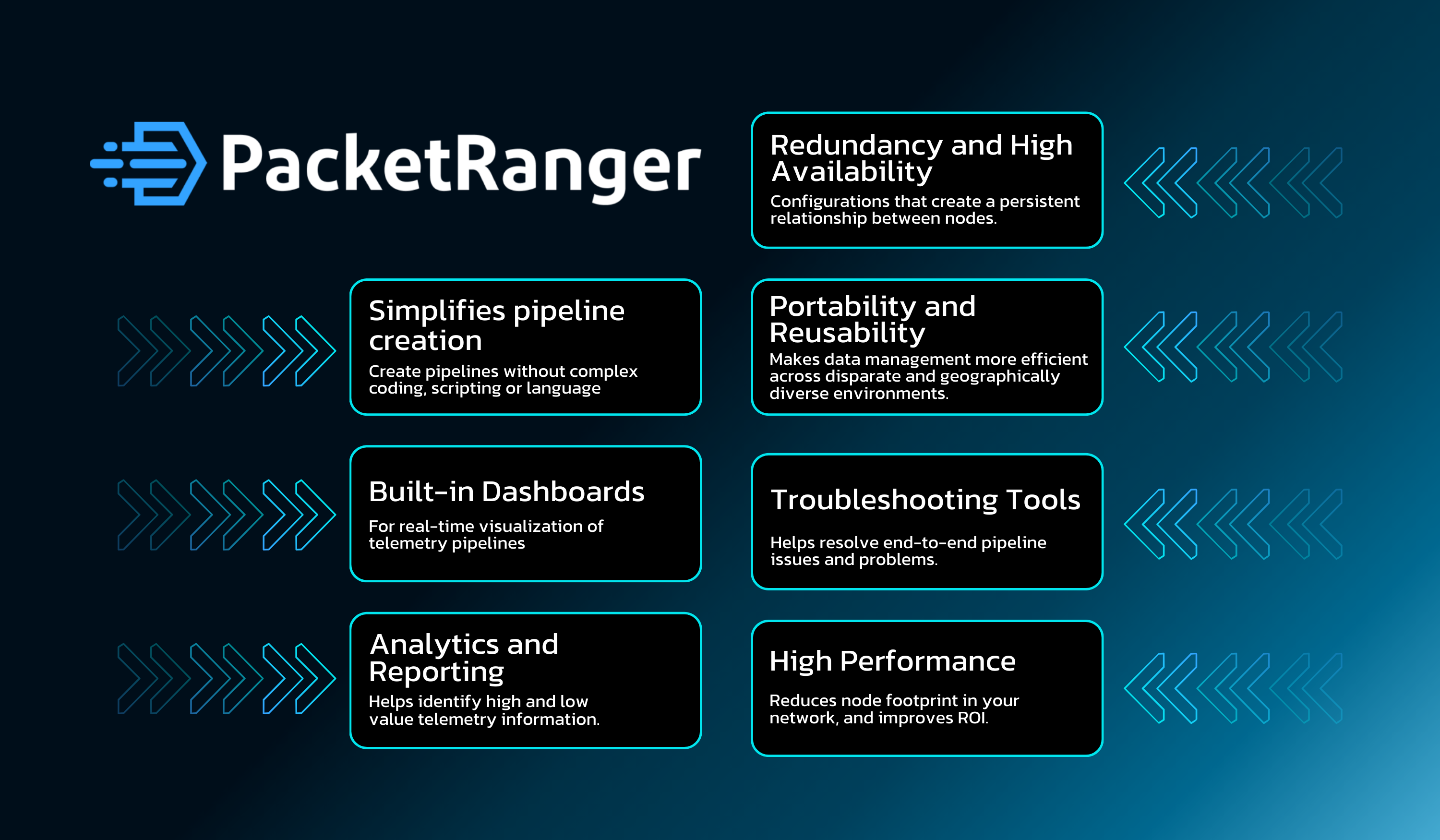

How PacketRanger Stands Out

- Simplifies pipeline creation (no need for complex coding, scripting, or pattern languages such as regular expressions, grok patterns, or Java evaluations)

- Built-in dashboards for real-time visualization of telemetry pipelines

- Analytics and Reporting that help identify high- and low-value telemetry information.

- Redundancy and High Availability configurations that create a persistent relationship between nodes.

- Portability and Reusability of configuration items making data management more efficient across disparate and geographically diverse environments.

- Troubleshooting Tools that help resolve end-to-end pipeline issues and problems.

- High Performance that reduces node footprint in your network and improves ROI.

Final Thoughts: Future-Proof Your Data Strategy

A telemetry pipeline is no longer a nice-to-have. It is a must-have for any organization that manages mission-critical infrastructure, systems, and applications. Whether you want to cut costs, improve IT operations, enhance system performance, or speed up incident resolution, a well-implemented telemetry pipeline is the key.

A telemetry pipeline isn’t just about managing logs, metrics, and traces. It’s about running your business better. If your organization isn’t using a telemetry broker to manage its data pipelines, then you’re already behind.

If your organization is struggling with telemetry overload, alert delay/fatigue, or high costs, it is time to invest in a smarter telemetry pipeline solution like PacketRanger.

Ready to streamline your telemetry data? Contact us for a demo and see how PacketRanger can transform your IT operations.